Brief Information and Front Matter

The Registry of Efficacy and Effectiveness Studies seeks to register basic study information and pre-analysis plans for studies designed to establish causal conclusions. Eligible designs include randomized trials, quasi-experimental designs, regression discontinuity designs, and single case designs.

The Registry includes eight sections: General Study Information, Description of Study, Research Questions, Study Design, Sample Characteristics, Outcomes, Analysis Plans, and Additional Materials. Study data can be updated at any time. All updates will be posted along with the date and explanation for the update. Data from the Registry will be searchable and exportable.

The Registry seeks to provide timely information on causal inference studies in the social sciences that are in the planning phase, in progress, or completed, in an effort to increase the transparency of effectiveness research.

Main Screen

Figure 1 displays the main login screen. From here, a user can learn more about the Registry itself (About), obtain a sample registry (Help > Sample Registry), or search for studies included in the Registry by topic, grade, intervention type, design type, and other criteria (Search The Registry).

To begin, click on Start a New Submission. This will lead to the main instructions page.

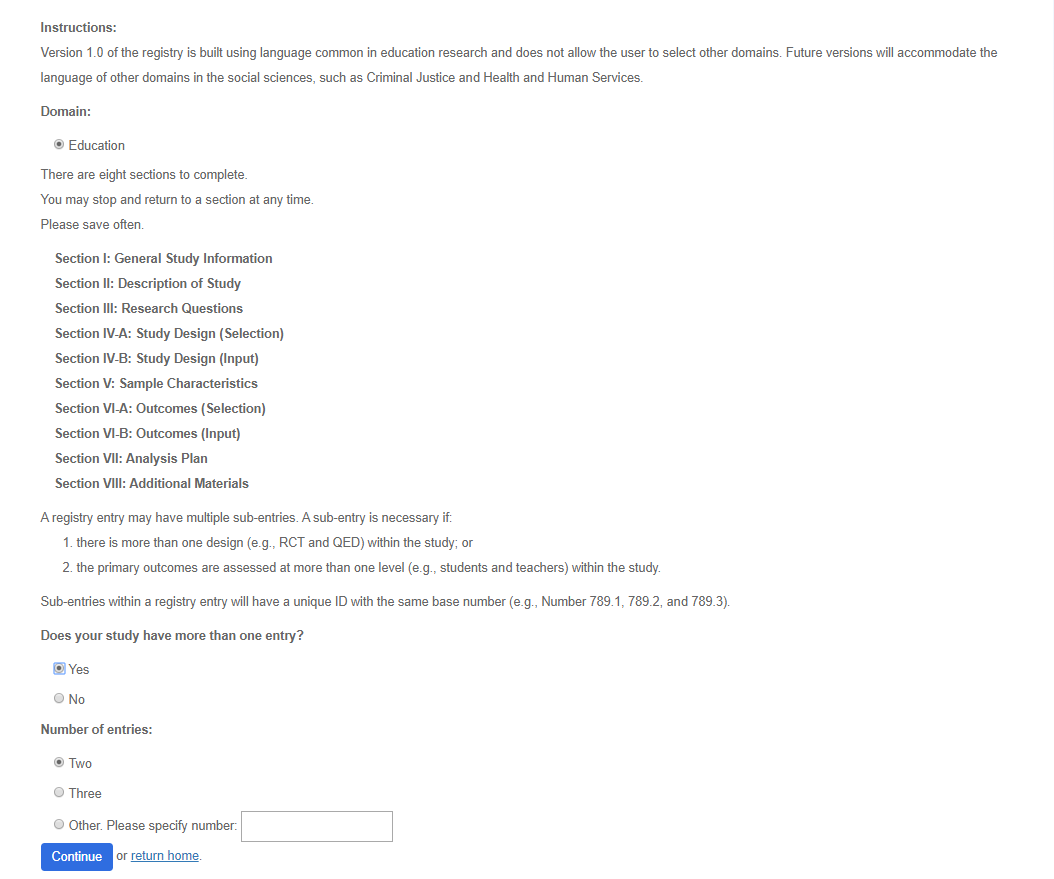

Select the primary domain in which the study takes place. The questions for the Registry are the same regardless of the domain; only the language differs. For example, the term "students" is used in education, whereas "individuals" is used in other domains. The current version only accommodates the language of education.

Use the guidelines to determine whether sub-entries are necessary. If so, select the number of sub-entries. Sections I through VIII are required for each entry. Sub-entries within a registry entry will have unique IDs that share the same base number (e.g., 789.1, 789.2, and 789.3).

Click on continue to access the Registry for a study.

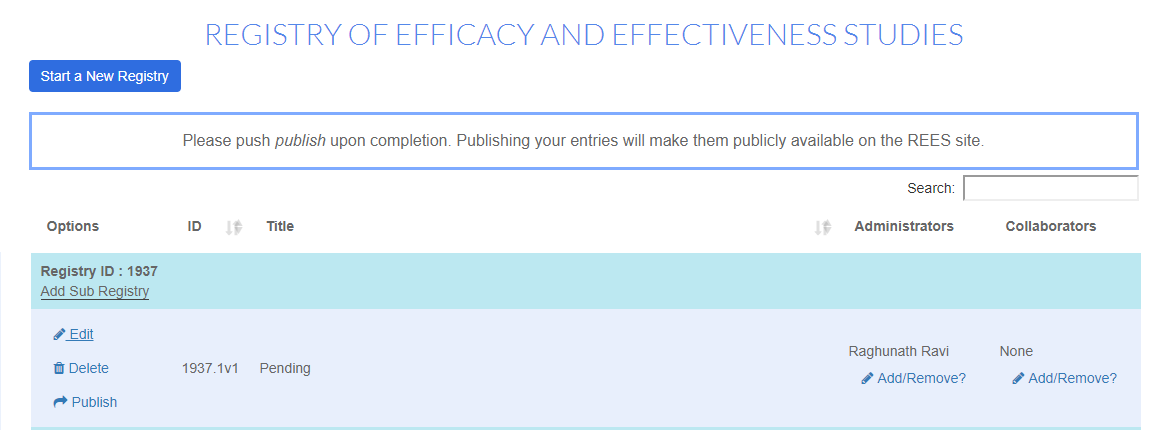

Once a study is created, it will appear on the Registry Contents Screen when a user logs into the Registry.

To continue editing a study entry, click edit.

To delete the entry, click on delete and follow the instructions.

The person who first creates the study will be listed as an administrator.

Study administrators have access to the Registry entry and can add and delete text.

Collaborators are persons who can view and edit the Registry entry but cannot publish or delete it.

To add or remove an administrator or collaborator, click the corresponding Add/Remove option and follow the instructions.

Once a study is published, it cannot be edited; changes must be made in a new version.

Useful tips:

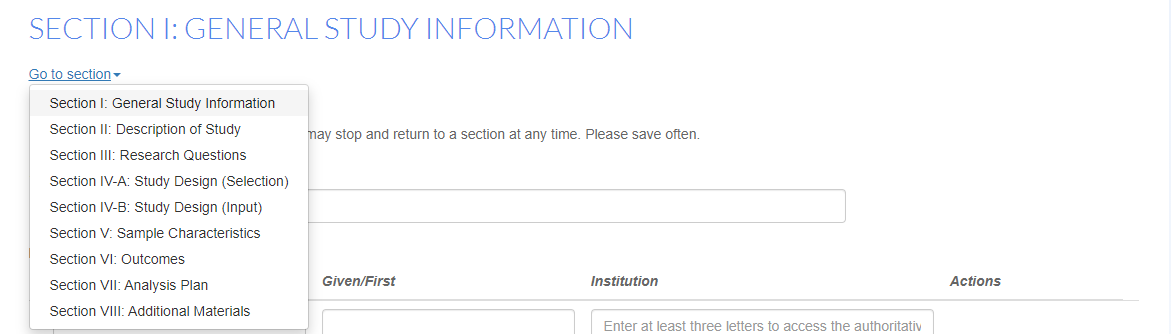

Users may skip to a section using the following:

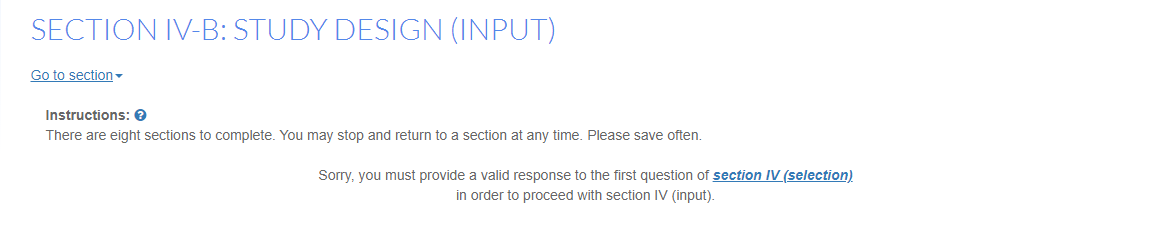

However, some sections cannot be completed until information in an earlier section is complete. If a user tries to complete the later section first, an error message will appear.

The icon appears throughout and can be clicked on to provide additional instructions.

Please save often.

Guide to sections


- Section I : GENERAL STUDY INFORMATION

- Section II : DESCRIPTION OF STUDY

- Section III : RESEARCH QUESTIONS

- Section IV : STUDY DESIGN

- Section V : SAMPLE CHARACTERISTICS

- Section VI : OUTCOMES

- Section VII : ANALYSIS PLAN

- Section VIII : ADDITIONAL MATERIALS

Section I: General Information

Study Title: Full title of the study.

If there is more than one PI, continue entering data; a new row of input fields will appear automatically. The last row can be left empty.

PI First Name: First Name of Principal Investigator.

PI Last Name: Last Name of Principal Investigator.

PI Affiliation: Affiliation of Principal Investigator.

Primary Funding Source: Select the primary funding source for the study. If other, please provide the name of the funder (e.g., William T. Grant Foundation, Spencer Foundation).

Award Number(s): Award number or numbers for the study.

IRB Name: Name of IRB approving the study.

IRB Approval Date: Date the study received IRB approval.

IRB Approval Number: Approval number from IRB.

Other Registration Name: Identify any other registry that houses this study.

Other Registration Date: Date of registration.

Other Registration Number: Registration number from other registry.

Study Start Date: Date the study starts. This is the same as the date the grant officially begins.

Study End Date: Date the study ends. This is the same as the date the grant is scheduled to end.

Intervention Start Date: Date the intervention starts.

Registration timing: Select the option that best describes the timing of this registry entry.

Brief Abstract: Brief abstract for the study.

Keywords: Three keywords to describe the study.

Optional Comments for Section I: Please add any additional clarifying information you would like to include for Section I.

Section II: Description of Study

Type of Intervention: Select the type of intervention. Note that more than one type may be selected in the event that the intervention crosses multiple categories.

Topic Area of Intervention: Select the topic area of the intervention. Note that more than one topic area may be selected.

Number of Intervention Arms: Select the number of treatment arms in the study, not including the comparison group. For example, if there is one intervention group and one comparison group, the number of intervention arms should be 1.

Target School Level of Intervention: Select the target school level. Note that more than one target school level may be selected.

Target School Type: Select all school types that apply. Note that more than one school type may be selected.

Location of Implementation: Select the location of implementation. Clicking on the US map defines the specific locations selected; these are then supplemented by the brief description provided by the user.

Further Description of Location of Implementation: Brief description of the location of implementation, including names of states, etc.

Brief Description of Intervention Condition: Brief description of the intervention condition.

Brief Description of Comparison Condition: Brief description of the comparison condition.

Comparison Condition: Select the type of comparison condition. For either selection, include a brief description of the comparison condition.

Optional Comments for Section II: Please add any additional clarifying information you would like to include for section II.

Section III: Research Questions

State the confirmatory research question(s), one question per box:

Each confirmatory question should address the primary impact that the study is designed to examine.

Note that research questions should include the following: the name of the intervention, the counterfactual condition (business-as-usual or the name of the comparison condition), the outcome domain, and the educational level of the students (criteria adopted from the National Evaluation of i3).

There may be more than one outcome measure for an outcome domain. For example, math achievement may have two outcome measures. Specific outcome measures will be defined in Section VI.

Question 1: Write confirmatory research question 1.

Add as many confirmatory research questions as there are for the study. (A new textbox will open when you begin typing in the visible box.)

State the exploratory research question(s) at this time, one question per box:

Note that no additional information will be collected for the exploratory questions, so including them is optional.

Question 1: Write exploratory research question 1.

Add as many exploratory research questions as there are for the study. (A new textbox will open when you begin typing in the visible box.)

Optional Comments for Section III: Please add any additional clarifying information you would like to include for section III.

Section IV-A: Study Design (Selection)

This section identifies the appropriate study design. The questions in Section V depend on the design selected.

Study Design: Select the appropriate design category.

Randomized Trial (RT): Study in which units are randomly assigned to condition. Units may be individuals or clusters such as classrooms or schools.

Quasi-experimental Design with Comparison Group (QED): Study in which units are not randomly assigned to condition. Units may be individuals or clusters such as classrooms or schools.

Regression Discontinuity Design (RDD): Study in which units are assigned to condition based on whether their score on an assignment variable falls above or below a specified cutoff or threshold.

Single Case Design (SCD): Study in which the units of analysis serve as their own control.

Optional Comments for Section IV-A: Please add any additional clarifying information you would like to include for section IV-A.

Section IV-B: Study Design (Input)

Randomized Trial

All designs here are randomized trials, or designs in which units are randomly assigned to condition. Units may be individuals or clusters of individuals. The following questions are used to determine the specific design of the RT.

What is the unit of random assignment of the intervention: Select the unit which is randomly assigned to condition.

Did random assignment occur within sites or blocks?

Yes: This applies when units are randomly assigned to condition within a site or block. For example, natural blocking occurs when schools in multiple districts are randomly assigned to condition separately within each district. Random assignment can also occur within purposefully formed strata. For example, suppose that schools in a study vary in the percentage of students eligible for free and reduced-price lunch. Before random assignment, schools with similar percentages are grouped into strata; then, within each stratum, schools are randomly assigned to condition.
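As an illustration of random assignment within blocks, the Python sketch below shuffles units separately within each block, assigns the intended share to treatment, and reports the realized probability of assignment to treatment in each block as a decimal. It is a minimal sketch under stated assumptions, not a feature of the Registry: the function names, the `p_treat` parameter, and the school and district labels are all hypothetical.

```python
import random
from collections import defaultdict


def assign_within_blocks(units, p_treat=0.5, seed=None):
    """Randomly assign units to condition separately within each block.

    units: list of (unit_id, block_id) pairs, e.g. (school, district) or
           (school, FRL stratum). Names here are illustrative only.
    p_treat: intended proportion of each block assigned to treatment.
    Returns a dict mapping unit_id -> "treatment" or "comparison".
    """
    rng = random.Random(seed)
    members_by_block = defaultdict(list)
    for unit_id, block_id in units:
        members_by_block[block_id].append(unit_id)

    assignment = {}
    for members in members_by_block.values():
        shuffled = members[:]
        rng.shuffle(shuffled)  # random order within this block only
        # round() rounds halves to even, so odd-sized blocks may not split exactly at p_treat
        n_treat = round(p_treat * len(shuffled))
        for i, unit_id in enumerate(shuffled):
            assignment[unit_id] = "treatment" if i < n_treat else "comparison"
    return assignment


def probability_by_block(units, assignment):
    """Realized probability of assignment to treatment per block, as a decimal."""
    counts = defaultdict(lambda: [0, 0])  # block_id -> [n_treated, n_total]
    for unit_id, block_id in units:
        counts[block_id][1] += 1
        if assignment[unit_id] == "treatment":
            counts[block_id][0] += 1
    return {block: n_t / n for block, (n_t, n) in counts.items()}


# Hypothetical example: 8 schools in two districts (the blocks).
schools = [("S1", "District A"), ("S2", "District A"), ("S3", "District A"),
           ("S4", "District A"), ("S5", "District B"), ("S6", "District B"),
           ("S7", "District B"), ("S8", "District B")]
assignment = assign_within_blocks(schools, p_treat=0.5, seed=2024)
print(probability_by_block(schools, assignment))
# {'District A': 0.5, 'District B': 0.5}
```

If the blocks end up with different realized probabilities (for example, because block sizes differ), that is the situation in which the probability of assignment to treatment is not the same across sites or blocks.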

If you answer Yes, you will be asked for more detail about the sites or blocks.

Please define the sites or blocks: Select the definition of the sites or blocks.

Was the probability of assignment to treatment the same across sites or blocks? (if applicable)

Select Yes if the probability of assignment to treatment was the same across sites or blocks, and then provide that probability.

Select No if the probability of assignment to treatment differed across sites or blocks, and then describe how it differed across blocks.

What was the probability of assignment to treatment? Please provide a reply in decimal form to move to the next question.

No: Units are not randomly assigned to condition within sites or blocks. For example, a sample of schools is recruited for a study and all schools in the sample are randomly assigned to condition as a single group.

If no to the "sites or blocks" question, then you will be asked:

What was the probability of assignment to treatment? Please provide a reply in decimal form to move to the next question.

What is the unit at which the outcome data is measured? Select the unit of measure for the data. For example, if individual student test score data is collected for the study the unit of measure is the student. If aggregate school level data is collected for the study, the unit of measure is the school.

Optional Section: Are there any intermediate clusters between the unit of random assignment and the unit of measurement?

There may be a unit between the unit of random assignment and the unit of measurement that is an important feature of the study design. For example, schools may be the unit of random assignment, students the unit of measurement, and classrooms an important intermediate level in the design. This optional section allows users to identify this intermediate level of clustering.

If yes, then please describe the intermediate level (e.g., classroom).

If no, there are no further questions.

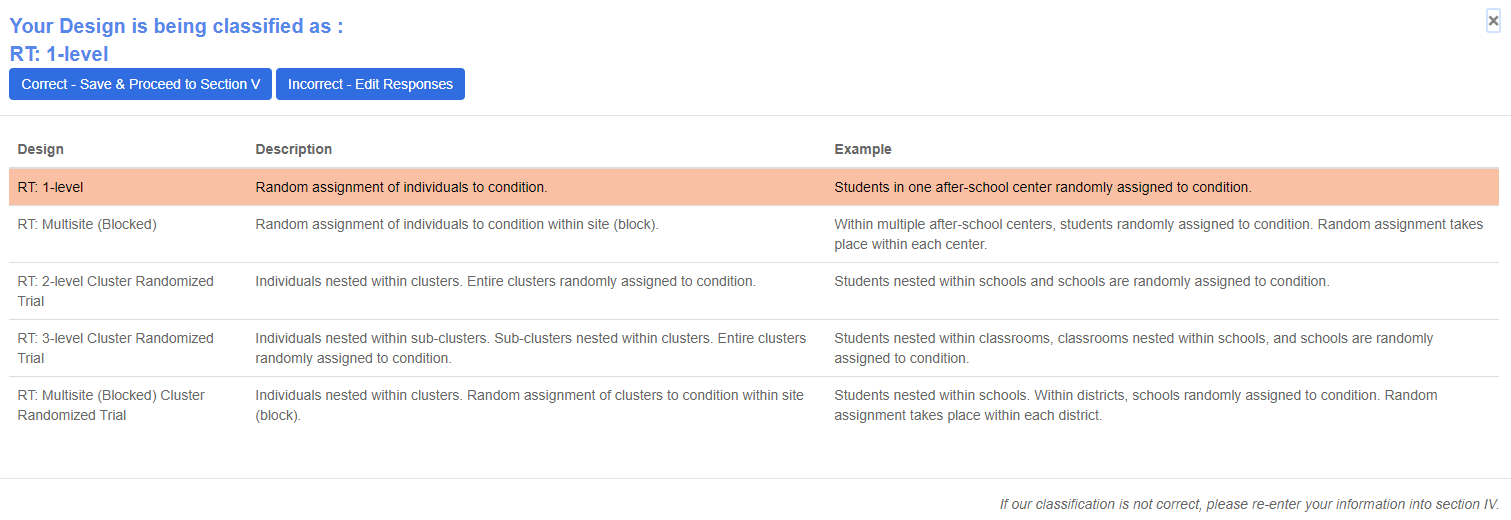

Verify My Design: This allows the user to interact with the REES to verify the design classification for the study.

If Correct, then proceed to the next section.

If Incorrect, please edit responses in Section IV-B to match the study design.

The highlighted row corresponds to the classification based on the user responses.

Optional Comments for Section IV-B: Please add any additional clarifying information you would like to include for section IV-B.

Quasi-experimental Design with comparison group (QED)

All designs here are quasi-experimental designs with a comparison group, or designs in which treatment units are matched to similar comparison units. Units are not randomly assigned to condition. Units may be individuals or clusters of individuals. The following questions are used to determine the specific design of the QED.

What is the unit at which the intervention is implemented: Select the unit at which the intervention is implemented.

Did implementation occur within blocks or sites?

Yes: This applies when treatment and comparison units are allocated within a block or site. For example, natural blocking occurs when schools in multiple districts are allocated to condition separately within each district. Allocation can also occur within purposefully formed strata. For example, suppose that schools in a study vary in the percentage of students eligible for free and reduced-price lunch. Before allocation, schools with similar percentages are grouped into strata; then, within each stratum, schools volunteer to participate in the treatment. Schools that do not volunteer are candidates for school-level matches within the district.
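The within-district matching described above can be sketched as greedy one-to-one nearest-neighbor matching on percent free and reduced-price lunch (FRL). This is an assumed, simplified procedure for illustration only; the Registry does not prescribe a matching method, and the function name and field names (`school`, `district`, `pct_frl`) are hypothetical.

```python
def match_within_district(treated, candidates):
    """Greedy 1:1 nearest-neighbor matching on percent FRL, without replacement.

    treated, candidates: lists of dicts with hypothetical keys
    "school", "district", and "pct_frl". Only comparison schools in the same
    district as the treated school are eligible.
    Returns (treated_school, comparison_school) pairs; the comparison is None
    when no unused candidate remains in that district.
    """
    used = set()
    pairs = []
    for t in treated:
        pool = [c for c in candidates
                if c["district"] == t["district"] and c["school"] not in used]
        if not pool:
            pairs.append((t["school"], None))
            continue
        # Closest percent FRL wins; ties go to the first candidate listed.
        best = min(pool, key=lambda c: abs(c["pct_frl"] - t["pct_frl"]))
        used.add(best["school"])
        pairs.append((t["school"], best["school"]))
    return pairs


# Hypothetical volunteer (treated) and non-volunteer (candidate) schools.
treated = [
    {"school": "T1", "district": "A", "pct_frl": 62.0},
    {"school": "T2", "district": "B", "pct_frl": 30.0},
]
candidates = [
    {"school": "C1", "district": "A", "pct_frl": 40.0},
    {"school": "C2", "district": "A", "pct_frl": 60.0},
    {"school": "C3", "district": "B", "pct_frl": 33.0},
    {"school": "C4", "district": "B", "pct_frl": 80.0},
]
print(match_within_district(treated, candidates))
# [('T1', 'C2'), ('T2', 'C3')]
```

Because matches are made greedily and without replacement, results can depend on the order of the treated schools; optimal matching or caliper matching are common alternatives worth naming in the matching-procedures comments.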

No: Units are not allocated to condition within a block or site. For example, a sample of schools is recruited for a study and all schools within the sample are allocated to condition regardless of district.

If you answer Yes, you will be asked for more detail about the blocks or sites.

Please define the natural blocks or sites: Select the definition of the blocks or sites.

What is the unit at which the outcome data is measured? Select the unit of measure for the data. For example, if individual student test score data is collected for the study the unit of measure is the student. If aggregate school level data is collected for the study, the unit of measure is the school.

Optional Section: Are there any intermediate clusters between the unit of implementation and the unit of measurement?

There may be a unit between the unit of implementation and the unit of measurement that is an important feature of the study design. For example, schools may be the unit of implementation, students the unit of measurement, and classrooms an important intermediate level in the design. This optional section allows users to identify this intermediate level of clustering.

If yes, then please describe the intermediate level (e.g., classroom).

If no, there are no further questions.

Upon clicking save and continue, you will be asked to verify your design.

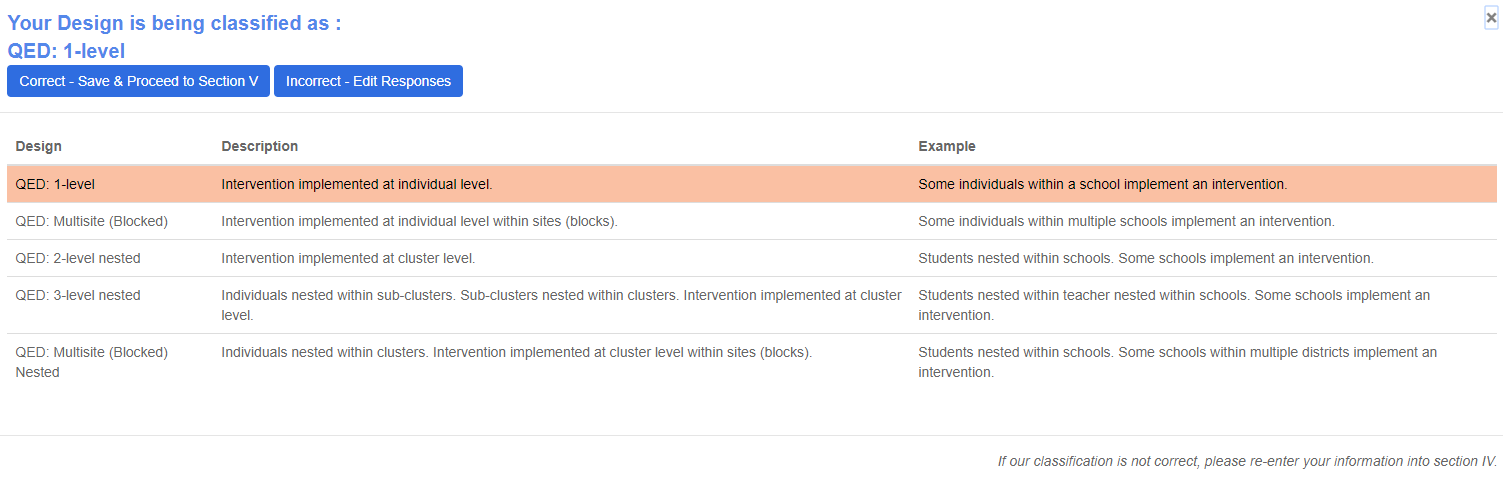

Verify My Design: This allows the user to interact with the REES to verify the design classification for the study. The designs are classified as shown in Figure 9.

If Correct, then proceed to the next section.

If Incorrect, please edit responses in Section IV-B to match the study design.

The highlighted row corresponds to the classification based on the user responses.

Study Design: Matching Procedures: If your study uses matching, please complete the given questions and/or describe the matching procedures in the optional comments box at the end of this section.

Optional Comments for Section IV-B: Please add any additional clarifying information you would like to include for section IV-B.

Regression Discontinuity Design (RDD)

All designs in this section are regression discontinuity designs, or designs in which units are assigned to condition based on a cut score. Units may be individuals or clusters of individuals. The following questions are used to determine the specific design of the RDD.

What is the unit of assignment of the intervention: Select the unit that is assigned to condition.

Did assignment of units occur across multiple sites: Select yes if this was a multi-site intervention.

What is the unit at which the outcome data are measured? Select the unit of measure for the data. For example, if individual student test score data is collected for the study the unit of measure is the student. If aggregate school level data is collected for the study, the unit of measure is the school.

Optional Section: Are there any intermediate clusters between the unit of assignment and the unit of measurement?

There may be a unit between the unit of assignment and the unit of measurement that is an important feature of the study design. For example, schools may be the unit of assignment, students the unit of measurement, and classrooms an important intermediate level in the design. This optional section allows users to identify this intermediate level of clustering.

If Yes, then please describe the intermediate level (e.g., classroom).

If No, there are no further questions.

Upon clicking save and continue, you will be asked to verify your design.

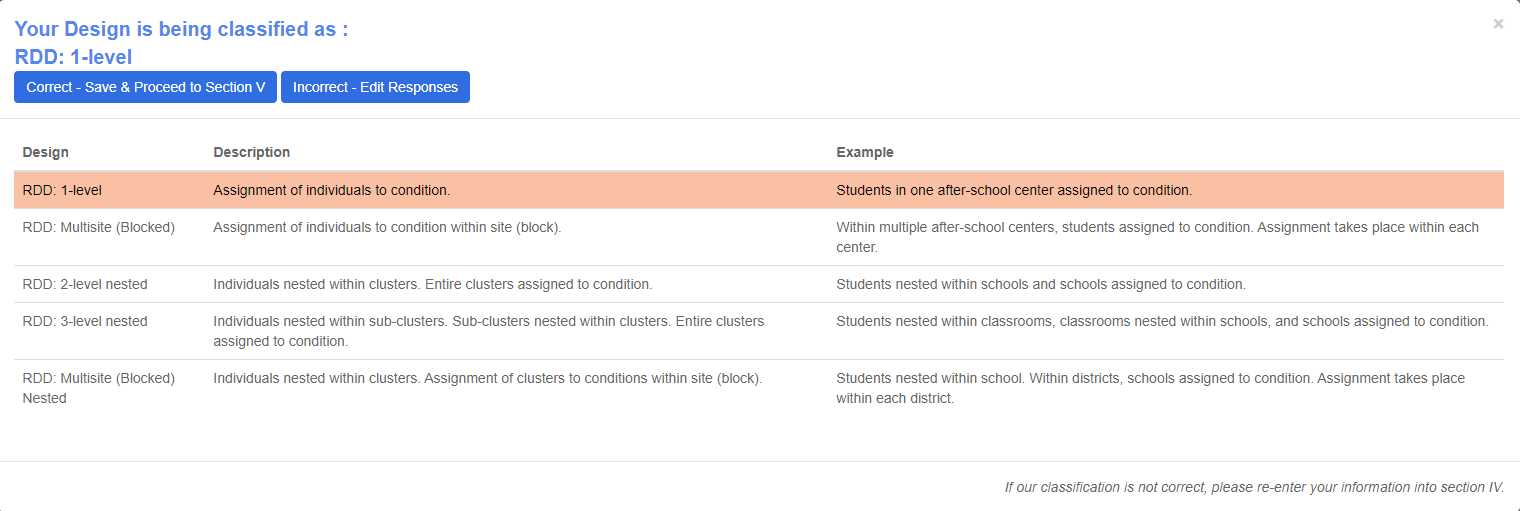

Verify My Design: This allows the user to interact with the REES to verify the design classification for the study. The designs are classified as shown in Figure 10.

If Correct, then proceed to the next section.

If Incorrect, please edit responses in Section IV-B to match the study design.

The highlighted row corresponds to the classification based on the user responses.

What is the assignment variable: Please enter the name of the assignment variable.

Describe how the assignment variable is constructed: Please describe how the assignment variable is constructed.

What is the scale associated with the assignment variable: Please select continuous or ordinal (discrete).

What is the approximate distribution of the assignment variable: Please enter the minimum, maximum, and mean.

Who determined the value of the assignment variable for each unit: Please select the appropriate response, providing additional relevant information as appropriate.

Who will select the cutoff for the assignment variable: Please select the appropriate response, providing additional relevant information as appropriate.

At what time point will the cutoff value be established: Please select the appropriate response, providing additional relevant information as appropriate.

What type of RD is the design: Please select the appropriate response, providing additional relevant information as appropriate.

What methods will be used to assess discontinuity in probability of treatment at the cutoff: Please select the appropriate response(s), providing descriptions of statistical tests, as appropriate.
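One simple descriptive check of the discontinuity in the probability of treatment is to compare the share of units treated just below and just above the cutoff. The sketch below assumes a hypothetical screening score with a cutoff of 40 and a bandwidth of 5. It labels a jump of exactly 1 as sharp and a smaller nonzero jump as fuzzy. The function names are hypothetical, and a formal analysis would typically use local regression rather than raw proportions.

```python
def treatment_rates_near_cutoff(scores, treated, cutoff, bandwidth):
    """Proportion of units treated just below and at/above the cutoff.

    scores: assignment-variable values; treated: 1/0 (or True/False) treatment receipt.
    Only units within `bandwidth` of the cutoff are used.
    """
    below = [t for s, t in zip(scores, treated) if cutoff - bandwidth <= s < cutoff]
    above = [t for s, t in zip(scores, treated) if cutoff <= s <= cutoff + bandwidth]
    if not below or not above:
        raise ValueError("need units within the bandwidth on both sides of the cutoff")
    return sum(below) / len(below), sum(above) / len(above)


def describe_discontinuity(rate_below, rate_above):
    """Label the jump in treatment probability at the cutoff."""
    jump = abs(rate_above - rate_below)
    if jump == 1.0:
        return "sharp"    # everyone on one side treated, no one on the other
    if jump > 0:
        return "fuzzy"    # treatment probability jumps, but by less than 1
    return "no jump"      # no discontinuity in treatment receipt


# Hypothetical screening test: students scoring below 40 are offered tutoring.
scores = [35, 37, 39, 40, 42, 44]
sharp = treatment_rates_near_cutoff(scores, [1, 1, 1, 0, 0, 0], cutoff=40, bandwidth=5)
fuzzy = treatment_rates_near_cutoff(scores, [1, 0, 1, 0, 1, 0], cutoff=40, bandwidth=5)
print(describe_discontinuity(*sharp))  # sharp
print(describe_discontinuity(*fuzzy))  # fuzzy
```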

What methods will be used to assess continuity in potential outcomes at the cutoff: Please select the appropriate response(s), providing descriptions of graphical analyses, statistical tests, or other methods, as appropriate.

Optional Comments for Section IV-B: Please add any additional clarifying information you would like to include for section IV-B.

Single Case Design (SCD)

Study Design: Select the appropriate design.

Based on the response above, further questions are asked to determine the SCD sub-design.

Did your study include any of the following types of a priori randomization? Select a type of a priori randomization from the list (if applicable).

Who are the participants: Select the appropriate participants, using the boxes to further specify participants.

Optional Comments for Section IV-B: Please add any additional clarifying information you would like to include for section IV-B.

Section V: Sample Characteristics

Section V differs depending on the classification of the study design in Section IV. Specific questions are tailored to the particular study design.

For RCTs, QEDs, and RDDs, this section asks for details related to:

- The relevant sample sizes at all levels

- Inclusion and exclusion criteria at all levels

For SCDs, this section asks for details related to:

- Inclusion and exclusion criteria for participants

- The recruitment method

Note that there is an optional comment box in this section for users to add any additional clarifying information.

Section VI: Outcomes

For each confirmatory research question specified in Section III, a set of questions (Outcomes) is asked in this section.

Each outcome can have more than one outcome measure. Additional measures can be added by pressing the "Add additional outcome measure for confirmatory question (n)" button at the end of each outcome.

Note that the same set of questions will be repeated for each outcome measure if more than one measure is added.

Select the outcome domain: Select the outcome domain that best describes the outcome.

Please report the minimum detectable effect size (MDES), that is, the smallest true effect that the study is designed to detect with a specified level of power and statistical significance.
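For reference, a standard large-sample approximation for the MDES, in standard deviation units, when individuals are randomly assigned is MDES = M * sqrt((1 - R^2) / (P(1 - P)N)). Here P is the proportion assigned to treatment, R^2 is the share of outcome variance explained by covariates, N is the sample size, and M is about 2.8 for a two-tailed test at alpha = 0.05 with 80 percent power. The sketch below implements that approximation together with a two-level version for clusters (e.g., schools) randomly assigned, using intraclass correlation `icc`. It uses normal quantiles, so with few clusters it will understate the MDES relative to a t-based multiplier. The function names and example values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def multiplier(alpha=0.05, power=0.80):
    """Large-sample multiplier M = z(1 - alpha/2) + z(power) for a two-tailed test."""
    z = NormalDist()
    return z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)


def mdes_individual(n, p_treat=0.5, r2=0.0, alpha=0.05, power=0.80):
    """MDES (standard deviation units) when n individuals are randomly assigned."""
    return multiplier(alpha, power) * sqrt((1 - r2) / (p_treat * (1 - p_treat) * n))


def mdes_cluster(j, n_per_cluster, icc, p_treat=0.5, r2_cluster=0.0,
                 r2_individual=0.0, alpha=0.05, power=0.80):
    """MDES for a two-level design with j clusters randomly assigned."""
    denom = p_treat * (1 - p_treat)
    between = icc * (1 - r2_cluster) / (denom * j)                     # cluster-level variance term
    within = (1 - icc) * (1 - r2_individual) / (denom * j * n_per_cluster)  # individual-level term
    return multiplier(alpha, power) * sqrt(between + within)


print(round(mdes_individual(400), 2))             # 0.28
print(round(mdes_cluster(40, 20, icc=0.20), 2))   # 0.43
```

The comparison in the example illustrates why the unit of random assignment matters: 40 schools of 20 students each (800 students) yield a larger MDES than 400 individually randomized students once the intraclass correlation is 0.20.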

Name of outcome measure: Provide the full name of the outcome measure.

What is the scale associated with the outcome measure? Select the scale for the outcome measure.

Is the outcome from a normed or state test?

Yes: Select yes if outcome is from a normed or state test.

No: Select no if outcome is not from a normed or state test. In this case, the following questions will appear.

Test-retest reliability: Provide the test-retest reliability (if known).

Internal consistency: Provide the internal consistency reliability (if known).

Inter-rater reliability: Provide the inter-rater reliability (if known).
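When an outcome is not from a normed or state test, internal consistency is often reported as Cronbach's alpha, computed as alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores) for k items. The sketch below computes it from a respondents-by-items table. The data and function name are hypothetical, and another reliability index may be more appropriate for a given measure.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items table of scores.

    scores: list of rows, one per respondent, each holding k item scores.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    items = list(zip(*scores))                              # columns = items
    sum_item_var = sum(variance(item) for item in items)    # sample variance of each item
    total_var = variance([sum(row) for row in scores])      # sample variance of total scores
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Hypothetical 4 respondents x 3 items
responses = [[2, 3, 3], [4, 4, 5], [3, 3, 4], [5, 5, 5]]
print(round(cronbach_alpha(responses), 2))  # 0.96
```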

Will the same outcome measure be collected in the treatment and comparison groups?

Yes: Select yes if the same outcome measure is collected in both groups.

No: Select no if the same outcome measure is not collected in both groups, and describe the differences.

Name of outcome measure: Provide the full name of the outcome measure.

What is the type of outcome measure? Select the type of outcome measure.

What is the type of measurement system for the outcome measure? Select the type of measurement system for the outcome measure.

Please describe how inter-assessor agreement will be assessed? Describe how inter-assessor agreement will be assessed.
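Two common indices of inter-assessor agreement for interval-by-interval recording are percent agreement and Cohen's kappa, which corrects for chance agreement: kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is agreement expected by chance. The sketch below computes both for two observers' hypothetical occurrence codes. It is one possible approach, not a Registry requirement, and the function names are hypothetical.

```python
from collections import Counter


def percent_agreement(obs_a, obs_b):
    """Proportion of intervals on which two assessors recorded the same code."""
    if len(obs_a) != len(obs_b) or not obs_a:
        raise ValueError("need two non-empty records of equal length")
    return sum(a == b for a, b in zip(obs_a, obs_b)) / len(obs_a)


def cohens_kappa(obs_a, obs_b):
    """Cohen's kappa: agreement corrected for the agreement expected by chance."""
    n = len(obs_a)
    p_observed = percent_agreement(obs_a, obs_b)
    counts_a, counts_b = Counter(obs_a), Counter(obs_b)
    # Chance agreement: sum over codes of the product of each assessor's marginal share.
    codes = set(counts_a) | set(counts_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in codes) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical occurrence (1) / non-occurrence (0) codes for 10 intervals.
observer_1 = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
observer_2 = [1, 0, 0, 1, 0, 0, 1, 1, 1, 1]
print(percent_agreement(observer_1, observer_2))       # 0.8
print(round(cohens_kappa(observer_1, observer_2), 2))  # 0.58
```

Reporting both indices can be informative: percent agreement is easy to interpret, while kappa is lower when most agreement could have arisen by chance.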

Please provide additional relevant information. Add any additional relevant information.

Optional Comments for Section VI: Please add any additional clarifying information you would like to include for section VI.

Section VII: Analysis Plan

Section VII differs depending on the classification of the study design in section IV. Specific questions are tailored to the particular study design.

For RCTs and QEDs, this section asks for details related to:

- Availability of baseline data

- Intended covariates for inclusion in the model at all levels

- Description of analytic model

- Plans for handling missing outcome data

- Plans for adjusting for multiple comparisons in cases with more than one outcome measure in an outcome domain
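As one example of a multiple-comparisons adjustment, the Benjamini-Hochberg procedure controls the false discovery rate across the outcome measures within a domain. The sketch below is a plain implementation of that procedure. The Registry does not mandate a specific correction, and the function name and p-values are hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure controlling the false discovery rate at q.

    Returns a list of booleans aligned with p_values: True where the null
    hypothesis is rejected.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    # Largest rank k with p_(k) <= (k / m) * q; all hypotheses ranked <= k are rejected.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_rank = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= max_rank
    return rejected


# Hypothetical p-values for measures within one outcome domain.
print(benjamini_hochberg([0.02, 0.03, 0.04]))            # [True, True, True]
print(benjamini_hochberg([0.010, 0.040, 0.030, 0.200]))  # [True, False, False, False]
```

By contrast, a Bonferroni correction (0.05 / 3, about 0.0167) would reject none of the three hypotheses in the first example, which is why the plan should state which procedure will be used.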

For RDDs, this section asks for details related to:

- Procedures for selecting the bandwidth around the cutoff value

- Procedures for assessing the robustness of the bandwidth

- Procedures for determining the functional form of the relationship between the outcome variable and the assignment variable

- Procedures for assessing the robustness of the functional form

- Intended covariates for inclusion in the model at all levels

- Description of the analytic model

- Plans for handling missing outcome data

- Plans for adjusting for multiple comparisons in cases with more than one outcome measure in an outcome domain

For SCDs, this section asks for details related to:

- Details of planned visual analysis of graphed data (if applicable)

- Procedures to assist in decision making

- Non-parametric non-overlap effect sizes used

- Parametric effect sizes used

- Analysis approaches used

- Additional details for the selected analyses
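As an example of a non-parametric non-overlap effect size, Nonoverlap of All Pairs (NAP) compares every baseline observation with every treatment observation, scores an improvement as 1 and a tie as 0.5, and divides by the number of pairs. The sketch below assumes by default that an increase in the outcome is an improvement; the function name and data are hypothetical.

```python
def nap(baseline, treatment, increase_is_improvement=True):
    """Nonoverlap of All Pairs: share of (baseline, treatment) pairs showing improvement.

    Each pair scores 1 if the treatment-phase value improves on the baseline value,
    0.5 if they are tied, and 0 otherwise.
    """
    if not baseline or not treatment:
        raise ValueError("both phases need at least one observation")
    score = 0.0
    for a in baseline:
        for b in treatment:
            diff = (b - a) if increase_is_improvement else (a - b)
            if diff > 0:
                score += 1.0
            elif diff == 0:
                score += 0.5
    return score / (len(baseline) * len(treatment))


# Hypothetical counts of on-task intervals per session.
baseline_phase = [2, 3, 3, 4]
treatment_phase = [4, 5, 6]
print(round(nap(baseline_phase, treatment_phase), 3))  # 0.958
```

For a behavior the intervention is meant to reduce, pass `increase_is_improvement=False` so that lower treatment-phase values count as improvements.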

Note that there is an optional comment box in this section for users to add any additional clarifying information.

Section VIII: Additional Materials

This section provides space for attaching additional information AND/OR providing a link to an external source. Materials may include the following:

Prior to Completion of Study:

- Study Proposal

- Sampling Plan

- Links to Study Website

- Logic Model

- Fidelity Measure

- Power Analysis

- Measures (instruments) to be used

Links to Study Website:

- Link to Published or Unpublished Papers

- Link to Study Reports

- Link to dataset

- Link to WWC review

Optional Comments for Section VIII: Please add any additional clarifying information you would like to include for section VIII.